Case Study: Reducing 5xx Errors and Improving the Crawlability and Indexability of a Website

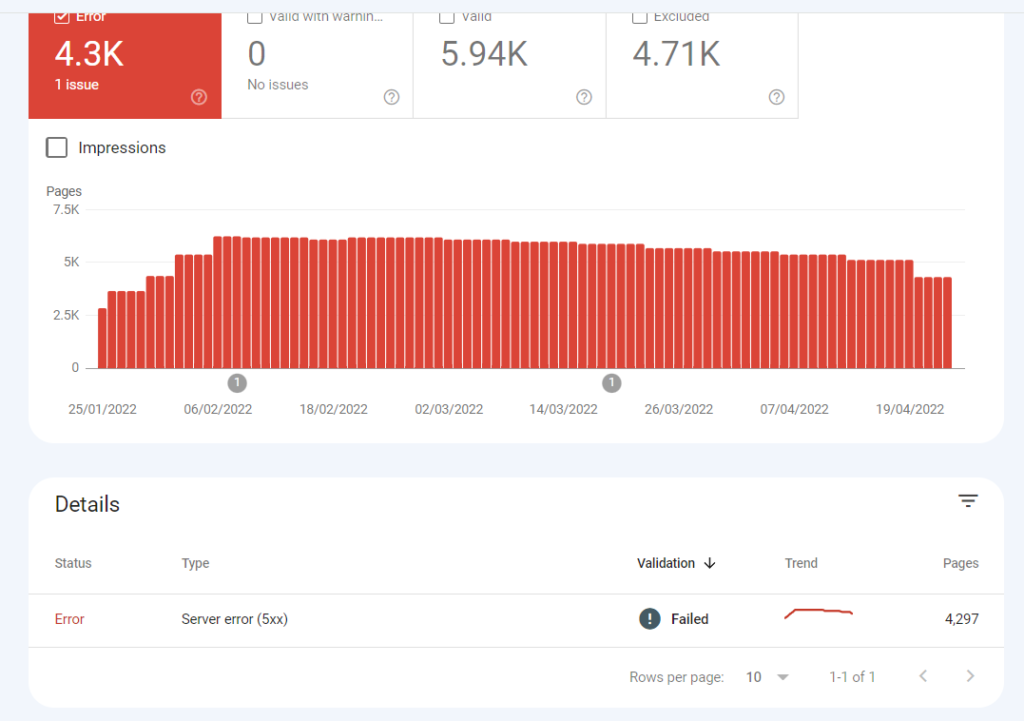

In January 2022, I came across a website with a large number of 5xx server errors (around 6,000), and because of them the crawlability and indexability of its new content were badly affected.

Skype Chat Screenshot

In this case study, I will explain how, with the help of the development team and some simple technical SEO knowledge, the errors were reduced from about 6,000 to 80.

It was a fairly big site (in the sports niche), and the errors did increase while I was working on it, but I am thankful for the trust and patience of the site owner.

However, if I had to sum up the whole thing, it would be: Article schema markup and redirection.

Before

Now

Now, let me show you the whole journey.

Auditing the Website

As every SEO does, I audited the website using Screaming Frog, and everything seemed fine to me. All pages had meta tags and heading tags, the graphics looked good (although the file sizes were large), and the other on-page elements were in order.

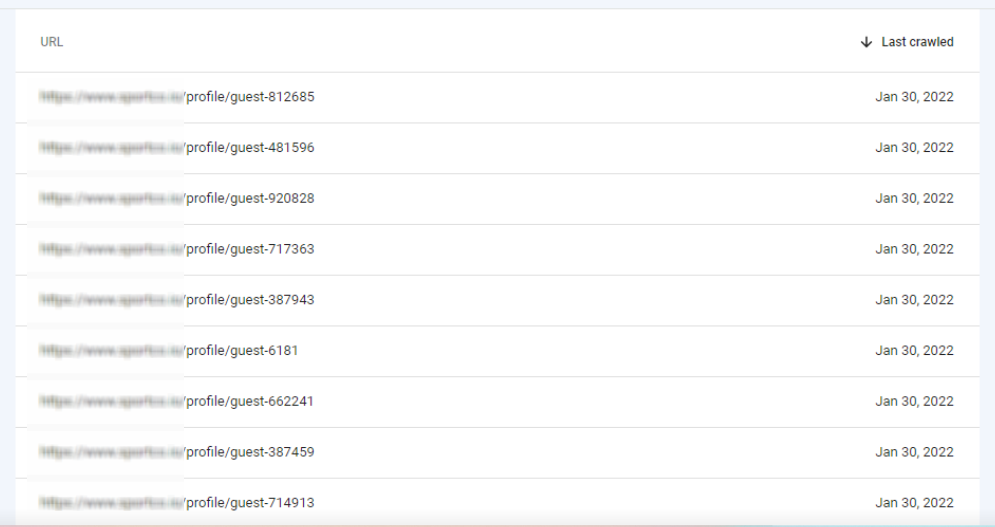

Next, I started looking at the errors in the console, and one remarkable thing I noticed was that most of the errors had one thing in common.

Here is a screenshot.
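As a rough illustration of how a shared pattern among error URLs can be spotted, here is a minimal Python sketch. It assumes the error URLs have been exported from the console as a plain list, and it groups them by their first path segment. Both the grouping rule and the `example.com` URLs are hypothetical; they are not the actual pattern found on this site.

```python
from collections import Counter
from urllib.parse import urlsplit


def count_by_first_segment(urls):
    """Count URLs by their first path segment, most frequent first."""
    counts = Counter()
    for url in urls:
        # Split the path on "/" and drop empty pieces.
        segments = [s for s in urlsplit(url).path.split("/") if s]
        # URLs with no path segments are counted under "/".
        counts[segments[0] if segments else "/"] += 1
    return counts.most_common()


# Hypothetical export of URLs that returned server errors.
error_urls = [
    "https://example.com/news/a",
    "https://example.com/news/b",
    "https://example.com/scores/1",
    "https://example.com/",
]

for segment, count in count_by_first_segment(error_urls):
    print(segment, count)
```

If one segment dominates the list, that is usually the place to start asking the dev team questions.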

So I immediately consulted the dev team. They suggested URL redirection and implemented it, and all of the URLs started working again.
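The dev team's actual redirect rules are not shown here, but the idea can be sketched as a lookup table from old paths to new ones. The Python sketch below is an assumption-laden illustration: the `redirects` mapping, its paths, and the `max_hops` limit are all hypothetical. It follows a redirect map to its final target and flags loops or long chains, which are worth checking before a map goes live.

```python
def resolve_redirect(path, redirects, max_hops=5):
    """Follow `redirects` from `path`; return (final_path, hop_count).

    Raises ValueError on a redirect loop or a chain longer than `max_hops`.
    """
    seen = [path]
    while path in redirects:
        path = redirects[path]
        if path in seen:
            raise ValueError("redirect loop: " + " -> ".join(seen + [path]))
        seen.append(path)
        if len(seen) - 1 > max_hops:
            raise ValueError("redirect chain too long: " + " -> ".join(seen))
    return path, len(seen) - 1


# Hypothetical redirect map; these are not the site's real URLs.
redirects = {
    "/old-news/match-1": "/news/match-1",
    "/news/match-1": "/football/news/match-1",
}

final_path, hops = resolve_redirect("/old-news/match-1", redirects)
print(final_path, hops)
```

A result with more than one hop suggests the first entry could point straight at the final URL, which saves crawlers an extra request.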

But the real problem was that the new content was not being crawled and was ranking at lower positions in search engines.

Meanwhile, validation in the console had been started, but the bots were still not indexing new content. They were simply ignoring it.

With so many errors on the website, Googlebot may have considered the site to be of lower quality.

The next step was to impress the bots.

I analyzed the website again from a technical SEO point of view and found that it lacked schema markup (structured data).

It was not easy to persuade the tech team to build a system that would automatically add schema markup to the code whenever a page was created.

They procrastinated, and meanwhile the site remained in the same condition.

So I decided to write the schema markup for newly updated articles manually and add it to the website's code myself. I did this for a month or so. The website's crawlability and indexability improved in March, as was evident from the decrease in errors.
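For readers who have not written Article schema markup before, the sketch below shows one way to produce it in Python as a JSON-LD script tag. It is a minimal illustration, not the exact markup used on this site. The function names, the chosen properties, and the sample article values are all assumptions. The property names themselves (`headline`, `author`, `datePublished`, and so on) come from schema.org's `Article` type.

```python
import json


def article_jsonld(headline, url, author_name, date_published,
                   date_modified=None, image_url=None):
    """Build a schema.org Article object as a plain dict."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "mainEntityOfPage": {"@type": "WebPage", "@id": url},
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
        # Fall back to the publish date when the article was never edited.
        "dateModified": date_modified or date_published,
    }
    if image_url:
        data["image"] = [image_url]
    return data


def to_script_tag(data):
    """Serialize the dict into a JSON-LD <script> tag for the page HTML."""
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so a value can never close the script element early.
    payload = payload.replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


# Hypothetical article values, for illustration only.
markup = article_jsonld(
    headline="Match report: City 2-1 United",
    url="https://example.com/news/match-report",
    author_name="Jane Doe",
    date_published="2022-02-01T09:00:00+00:00",
)
print(to_script_tag(markup))
```

The same function could later be called from the CMS's publish step, which is essentially what an automatic schema system does.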

Now we had proof that the errors could be minimized, and with this the web development team was convinced to implement automatic schema markup on every webpage.

Currently, the website still has lots of flaws and I am working on them, but it was a good learning experience. As an SEO, one has to be humble and keep training oneself.